The Open Medical Informatics Journal

(Discontinued)

ISSN: 1874-4311 ― Volume 13, 2019

Usability Laboratory Test of a Novel Mobile Homecare Application with Experienced Home Help Service Staff

I Scandurra*, 1, M Hägglund1, S Koch1, 2, M Lind3

Abstract

Using participatory design, we developed and deployed a mobile Virtual Health Record (VHR) on a personal digital assistant (PDA) together with experienced homecare staff. To assess transferability to a second setting and usability when used by novice users with limited system education, the application was tested in a usability lab. Eight participants from another homecare district performed tasks related to daily homecare work using the VHR. Test protocols were analyzed with regard to effectiveness, potential usability problems and user satisfaction. Usability problems having impact on system performance and contextual factors affecting system transferability were uncovered. Questionnaires revealed that the participants frequently used computers, but never PDAs. Surprisingly, there were only minor differences in input efficiency between novice and experienced users. The participants were overall satisfied with the application. However, transfer to another district cannot be performed without careful field observations of contextual differences.

Article Information

Identifiers and Pagination:

Year: 2008

Volume: 2

First Page: 117

Last Page: 128

Publisher Id: TOMINFOJ-2-117

DOI: 10.2174/1874431100802010117

Article History:

Received Date: 28/4/2008

Revision Received Date: 19/6/2008

Acceptance Date: 7/7/2008

Electronic publication date: 22/8/2008

Collection year: 2008

open-access license: This is an open access article licensed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/3.0/) which permits unrestricted, non-commercial use, distribution and reproduction in any medium, provided the work is properly cited.

* Address correspondence to this author at the Centre for eHealth, Uppsala University, Uppsala, Sweden; E-mail: Isabella.Scandurra@ehealth.uu.se

| Open Peer Review Details | |
|---|---|
| Manuscript submitted on | 28-4-2008 |
| Original Manuscript | Usability Laboratory Test of a Novel Mobile Homecare Application with Experienced Home Help Service Staff |

INTRODUCTION

Homecare professionals move frequently during their daily work and are in great need of mobile electronic support to improve information access, information sharing and communication within the broader healthcare team.

A disadvantage in homecare of elderly patients is that temporary staff are common and limited resources are available for education of these new recruits before commencing work. Education in using information and communication technology (ICT) is normally not prioritized. Limited time resources within homecare also put constraints on the time the staff can spend using ICT. Therefore, it is important that they can perform daily activities, supported by a mobile electronic tool, within the limited timeframe.

To meet the needs of the care professionals in homecare of the elderly we developed a prototype Virtual Health Record (VHR) in the action research project OLD@HOME [1Koch S, Hägglund M, Scandurra I, Moström D. Old@Home - Technical support for mobile closecare, Final report 2005 Report No.VR 2005: 14 http:// www.medsci.uu.se/mie/project/closecare/vr-05-14.pdf 2005. last visited June 19], using a method based on established theories from the fields of participatory design [2Schuler D, Namioka A, Eds. Participatory Design - principles and practices. New Jersey: Lawrence Erlbaum Associates 1993., 3Greenbaum J, Kyng M. Introduction: Situated Design In: Greenbaum JKM, Ed. Design at work: Cooperative design of computer systems. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc 1992; pp. 3-24.] and computer supported cooperative work [4Luff P, Heath C. Mobility in collaboration In: Poltrock S, Grudin J, Eds. In: Proceedings of the 1998 ACM Conference on Computer Supported Cooperative Work; 1998; November 14 - 18, 1998; Seattle Washington, United States. New York: ACM Press 1998; pp. 305-14.-6Hardstone G, Hartswood M, Procter R, Slack R, Voss A, Rees G. Supporting informality: team working and integrated care records. Proceedings of the 2004 ACM Conference on Computer Supported Cooperative Work 2004; November 06 - 10, 2004; Chicago Illinois, USA. ACM Press 2004; pp. 142-51.].

PROTOTYPE – THE VIRTUAL HEALTH RECORD

The Virtual Health Record (VHR) was designed to address workflow issues, to improve communication and to bring key knowledge to the homecare staff at the point of care, i.e. in the homes of the elderly, using mobile electronic devices [1Koch S, Hägglund M, Scandurra I, Moström D. Old@Home - Technical support for mobile closecare, Final report 2005 Report No.VR 2005: 14 http:// www.medsci.uu.se/mie/project/closecare/vr-05-14.pdf 2005. last visited June 19].

The VHR was developed and tested in Hudiksvall, a small rural town in Sweden. It was used by three groups of care professionals, who were regularly involved in the homecare of elderly citizens. These groups were: (1) general practitioners (GP), (2) district nurses (DN) employed in primary care by the County Council of Gävleborg and (3) home help service personnel (HHS), mainly assistant nurses, employed by the municipality of Hudiksvall.

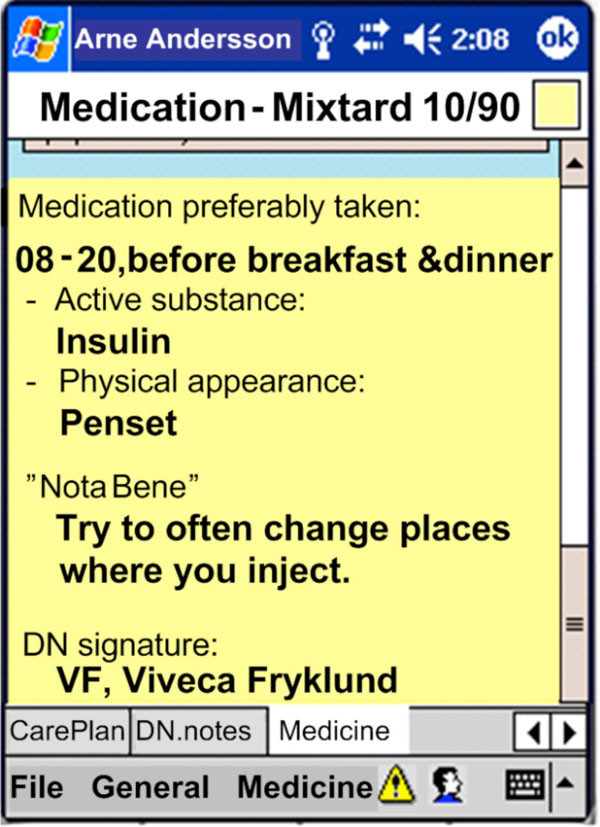

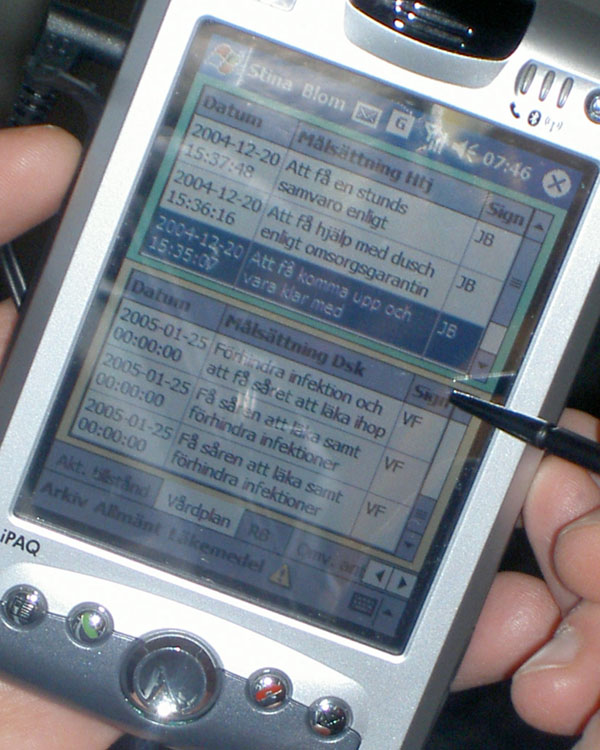

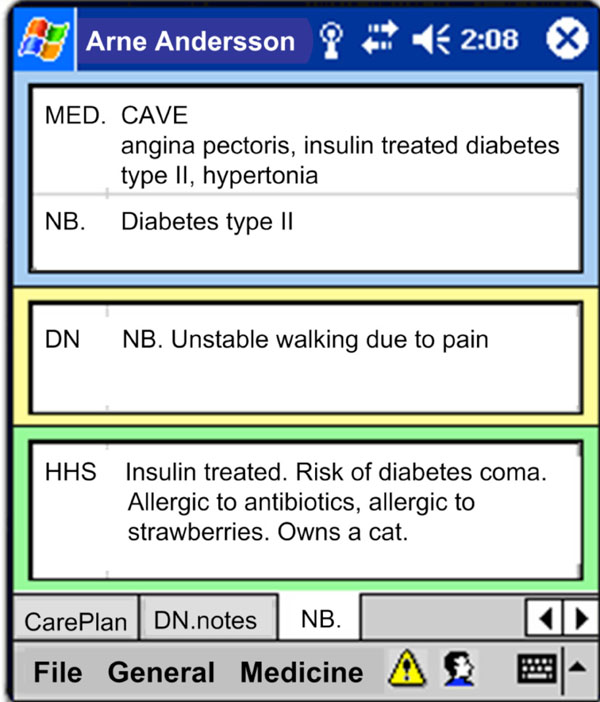

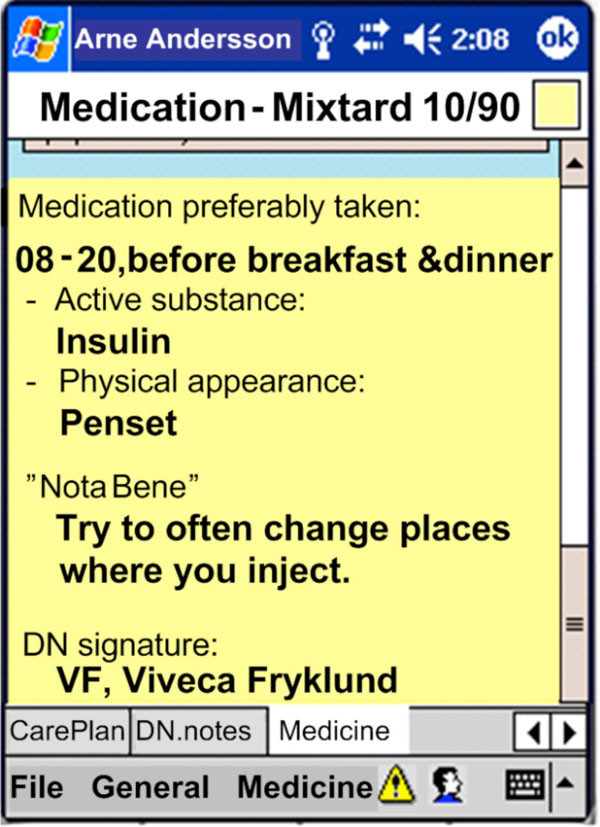

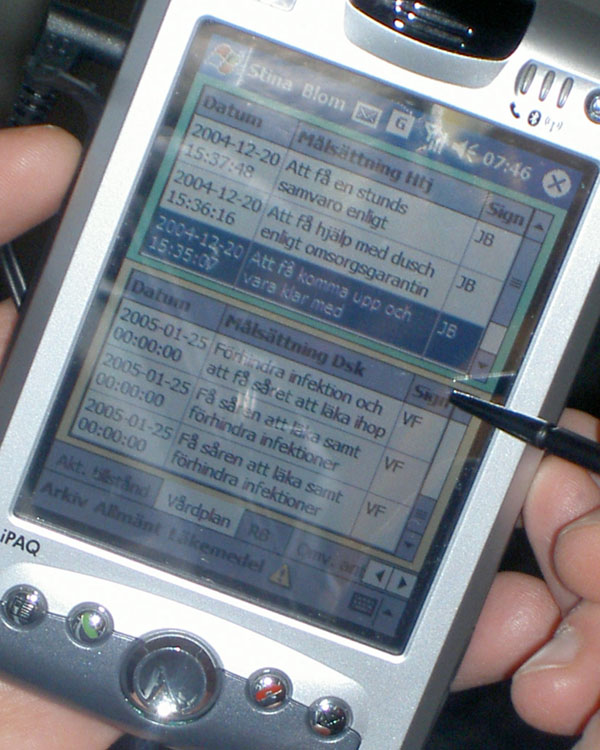

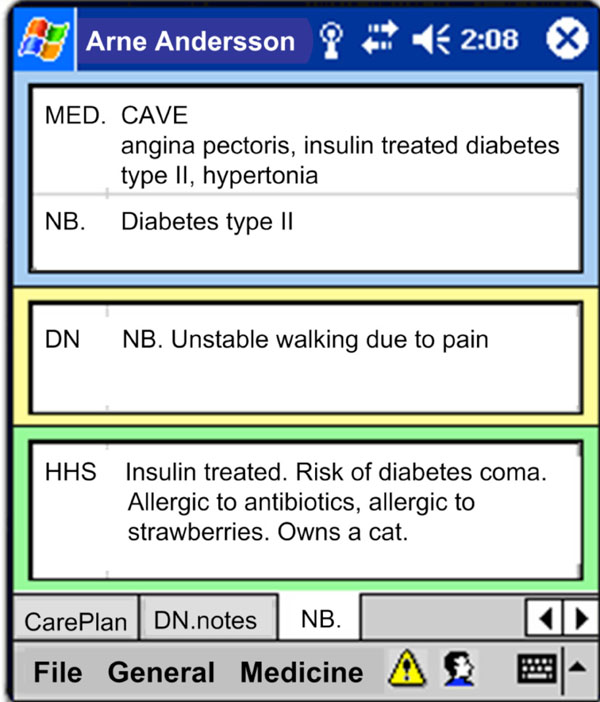

Using mobile electronic devices, such as personal digital assistants (PDAs) and tablet PCs, each care professional was able to access relevant patient information in profession-specific views from an integrated platform. This integrated platform incorporated a number of underlying feeder systems such as DN’s and GP’s electronic health records and HHS’ daily notes and care plans. Examples of patient data provided in the VHR include a modified prescription list for HHS (Fig. 1); an integrated care plan for HHS and DN (Fig. 2); risk factors (Fig. 3); as well as daily notes and status updates from all feeder systems [7Scandurra I, Hägglund M, Koch S. Visualisation and interaction design solutions to address specific demands in Shared Home Care Stud Health Technol Inform 2006; 124: 71-6.]. In this study, the focus was on evaluating the offline Pocket PC application on a PDA used by HHS.

Fig. (1) HHS prescription list.

Fig. (2) An integrated care plan.

Fig. (3) Display of various risk factors.

PURPOSE AND HYPOTHESIS

The prototype works adequately in the area where it was developed and where it was properly introduced to the staff. However, a common disadvantage of action research projects is that initial solutions are limited to one test site and not generalizable [8Kjeldskov J, Graham C. A review of mobile HCI research methods In: Chittaro L, Ed. Mobile HCI. Udine, Italy: Springer-Verlag GmbH 2003; pp. 317-5.]. It is known that transferability to other contexts depends on their similarity to the original context in terms of key characteristics and aspects [9Boivie I. A Fine Balance - addressing usability and users' needs in the development of IT systems for the workplace http: //publications.uu.se/abstract.xsql?.dbid=5947: Uppsala University 2008. last visited June 19]. It is also known that homecare is performed similarly in different Swedish districts. It is therefore of interest to test the transferability of this PDA solution, since it was developed in an action research project and is thus by definition neither generalizable nor transferable.

We hypothesized that the developed VHR can be transferred to a different homecare setting, and that novice users such as substitutes or new recruits, will be able to use the system with limited introduction.

The purpose of this study was therefore to investigate the usability of the VHR system following the International Organization for Standardization (ISO) standard 9241-11 [10ISO 9241-11, Ergonomic requirements for office work with visual display terminals, Part 11: Guidance on usability Geneva: International Organisation for Standardization 1998.], when used by specific users, in this case first-time users from a HHS group, in a specific context, that is when newly introduced to the system, performing specific tasks that are relevant to their work situation. More specifically, the purpose was to:

- evaluate the effectiveness for relevant and frequent tasks for the participants when completing their daily work, and

- where effectiveness is low, identify potential usability problems, seeking to improve the design, and to

- obtain subjective user satisfaction measures.

Additional requirements from the homecare staff were that “the system should be easy to use and learn” and that “the tasks needed to be performed within a short time”.

MATERIALS AND METHODOLOGY

To date, there are few usability evaluations performed on mobile applications for homecare staff [11Koch S. Home telehealth - Current state and future trends Int J Med Inform 2006; 75(8): 565-76.]. To obtain objective measures and to collect subjective opinions we chose to assess this mobile VHR in a usability lab following the conventional lab test methodology as explained by Dumas and Redish [12Dumas J, Redish J. A practical guide to usability testing In: Exeter, UK: Intellect Books. 1999; p. 22 et sqq.]. In order to detect technical bugs, usability inadequacies, or difficulties relating to the interaction between users and their ICT, real work situations need to be reflected in the usability lab [13Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems J Biomed Inform 2004; 37(1): 56-76., 14Beuscart-Zephir MC, Brender J, Beuscart R, Menager-Depriester I. Cognitive evaluation: How to assess the usability of information technology in healthcare Comput Methods Programs Biomed 1997; 54(1-2): 19-28.]. To tailor the test to the homecare process, specific limitations were needed, as described in the sections below.

Test Participant Profile

Test participant profiles were created to model real users. A prerequisite was that all HHS personnel had high domain knowledge and the relevant education necessary for assistant nurses. Since the participants were required to resemble substitutes, we ensured that the HHS personnel did not know the patient they were visiting (in the test).

Computer training is rarely available for homecare staff. Therefore, in this simulated real-environment test, no specific computer training was demanded, resulting in a potential range of computer skills from “novice” to “expert”.

Education of Participants

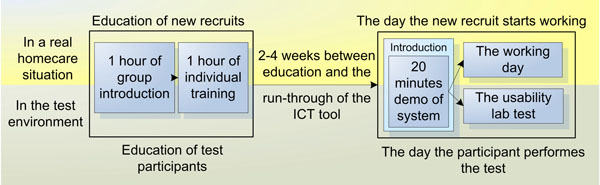

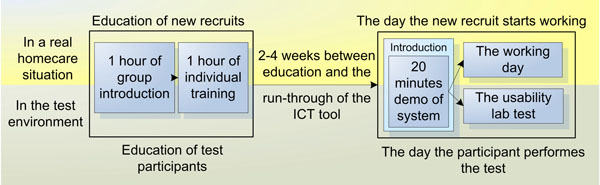

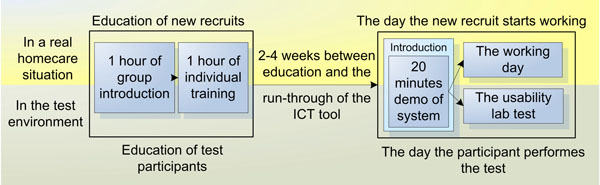

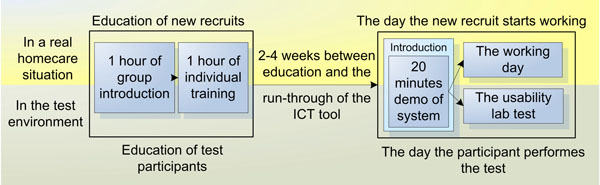

The design of the test strove to reflect a realistic situation for HHS, i.e. a correct context of use. Five managers in Elderly Care/Homecare in Sweden validated the proposed education for the participants as a realistic representation of the resources usually spent on educating substitutes (Fig. 4). According to these managers, the introduction for substitutes or new HHS personnel normally consists of two days of apprenticeship, where specific tools like an ICT system could occupy approximately two hours, one hour for a group introduction and one hour for individual education. The day a new recruit starts working, a colleague would give a run-through of the local work procedures, within which a maximum of 20 minutes would focus on the ICT tool.

Fig. (4) A realistic amount of education was given to test participants, similar to that provided for real homecare recruits.

Consequently, education of the VHR system for the participants was conducted in the same way as the one for new recruits in a real homecare setting.

Two assistant nurses from the development site, who were experts on the system, were invited to present the VHR and to describe the purpose of the system; how the VHR was used in practice; and specifically how the functions supported homecare work situations. This group introduction was held during an ordinary staff meeting and lasted for 30 minutes (Fig. 4). Another 30-minute presentation was given by the test coordinators to describe the usability lab and explain how the test was to be performed. Questions from the staff were answered and eight interested personnel voluntarily enrolled to participate in the test.

In groups of two beginners supported by one of the experienced assistant nurses the entire system was walked through in an hour. The experienced user described each function and design solution thoroughly. Every new user followed a number of scenarios to perform daily tasks using the PDA. While sitting next to a colleague the new users either asked each other or got support on how to solve the task from the experienced user. According to interviews, this 2-on-1 education corresponded to the education which was likely to be given to new or substitute homecare personnel in a real situation and kept within the feasible timeframe suggested by the managers. This setting was conducive to the “natural learning mode” and therefore worked well. The participants were relaxed and comfortable. For this test, no other PDA or previous ICT training was required.

Test Procedure

Eight participants were sequentially invited to the Usability Lab at the Department of Information Science, Uppsala University in Uppsala. Upon arrival, the participant was greeted and introduced to the lab. She was informed about her rights and thereafter signed an approved consent document. A questionnaire providing background information about the participant was also completed. Prior to the test, the participant was given a run-through of the system. Usually, there are two reasons for providing education during a usability test: (1) to ensure that all participants have the same level of skill or knowledge before they begin the tasks, or (2) to provide some participants with education that others do not get [12Dumas J, Redish J. A practical guide to usability testing In: Exeter, UK: Intellect Books. 1999; p. 22 et sqq.] (p. 212). In this case, the purpose was to simulate the first working day, as it would start for a substitute in homecare (Fig. 4). The run-through of the application and the device lasted 20 minutes. All participants underwent the same demonstration.

While performing the test, the participant followed 14 cue cards containing tasks (see Table 1 and the section below). The participant was asked to read the task out loud and then indicate when she found the correct itinerary on the PDA to complete the task, or when she thought the correct itinerary was found, i.e. correct actions (Fig. 5). She was also encouraged to think aloud while performing the task. No manual or feedback was provided.

Fig. (5) Participant in the test room, and test coordinator in the observation room.

The test was performed in an hour, including pre- and post-test questionnaires. These questionnaires were developed for the test in line with the methodology of Dumas and Redish [12Dumas J, Redish J. A practical guide to usability testing In: Exeter, UK: Intellect Books. 1999; p. 22 et sqq.]. Two assistant nurses who were both domain experts and experienced users of the system were invited to validate all statements in the questionnaires and to tune the tasks before the test procedure started. When the invited nurses considered the tasks realistic for homecare work and understandable for their colleagues, they carried out a pilot test.

Tasks to Meet Homecare Goals

Goals within the homecare practice were elicited in a context of use analysis [15Maguire M. Context of use within usability activities Int J Hum Comput Stud 2001; 55(4): 453-83.]. These goals were fundamental to all daily activities performed to provide quality homecare. Following the normal work flow for the HHS staff, 14 realistic task scenarios (Table 1) were created and categorized according to the main goals:

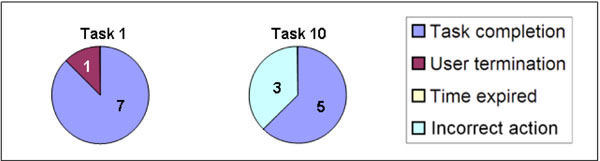

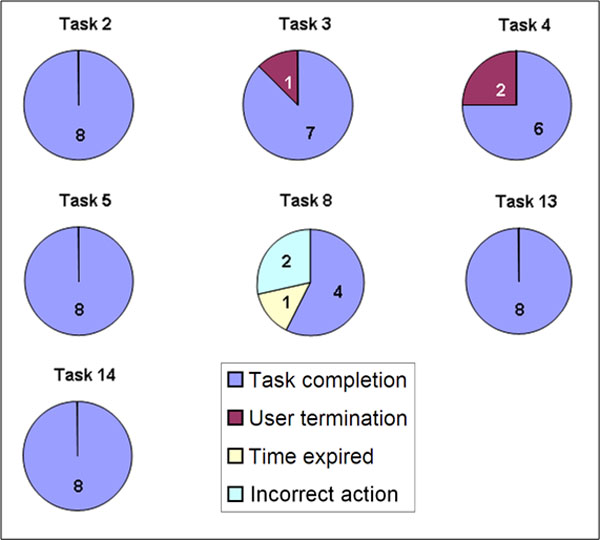

- HHS need to be able to find practical information about a patient (2 tasks: 1, 10),

- HHS need to be able to find health-related information about a patient (7 tasks: 2, 3, 4, 5, 8, 13, 14), and

- HHS need to be able to document new information (3 tasks: 6, 7, 11).

Two navigation tasks (9 and 12) are labeled “N”. Similar tasks were used during education and during the run-through session shortly before the test.

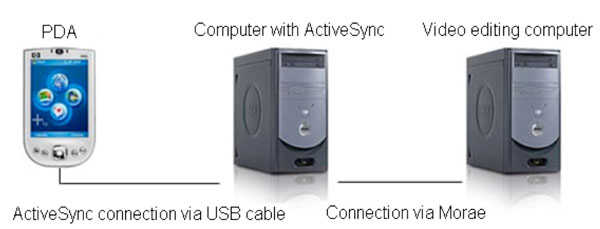

Laboratory Setup

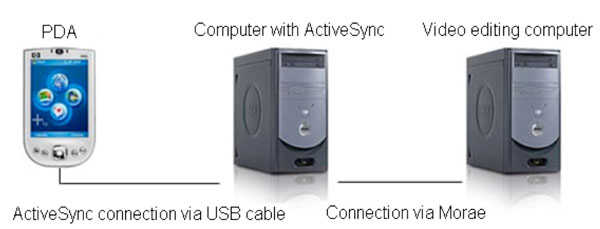

Usage of the application on the PDA was recorded in several ways. All interactions on the PDA screen were digitally recorded on a computer in the observation room (Fig. 6). The transfer application used on the PDA was Active-Sync Remote Display v.2.03. An external camera recorded the PDA and the physical interactions as well as when the user changed tasks. These tasks were printed on cards next to the PDA (Fig. 5, left side). In the observation room, the observers used paper notes to record the start and stop time for each task (Fig. 5, right side). This helped in decoding the recorded videos. The software used for recording the test and decoding the video was Morae v.1.2. The computer in the observation room was running an Intel Pentium 4 3 GHz Hyper-Threading processor with 1 GB of RAM together with an ATI Radeon X700 graphics card. The operating system was Windows XP Pro. The PDA used was an HP iPAQ H6300 Series with the operating system Pocket PC 2003. The test team consisted of the first author and two M.Sc. students, and the test was carried out in April 2007.

Fig. (6) Handheld usability laboratory set-up.

Measures of Effectiveness

Effectiveness was measured as the number of users who completed the tasks. A task was completed when the participant performed a correct action, i.e. reached the goal within a pre-defined time limit. This limit was individual for each task and was reached when the test participant had consumed twice as much time as the experienced users. This definition was based on the limited resources in homecare, which constrain the time the staff can spend using ICT. Task failures were counted as incorrect actions, exceeding the time limit, and/or user termination. For a task to be considered effective, the total completion rate needed to be at least six of eight participants.
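The effectiveness criteria above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the outcome labels as string constants, and the order in which the failure causes are checked are hypothetical and are not taken from the study's own analysis tooling.

```python
# Outcome categories named in the text; the string labels are illustrative.
COMPLETED = "completed"
INCORRECT = "incorrect_action"
TIME_EXPIRED = "time_expired"
TERMINATED = "user_termination"


def time_limit(expert_seconds: float) -> float:
    """Per-task limit: twice the time needed by the experienced users."""
    return 2 * expert_seconds


def classify_trial(reached_goal: bool, elapsed: float,
                   expert_seconds: float, user_gave_up: bool = False) -> str:
    """Classify one participant's attempt at one task.

    Assumption: termination is checked first, then the time limit,
    then correctness. The text does not specify an order.
    """
    if user_gave_up:
        return TERMINATED
    if elapsed >= time_limit(expert_seconds):
        return TIME_EXPIRED
    return COMPLETED if reached_goal else INCORRECT


def is_effective(outcomes: list[str], required: int = 6) -> bool:
    """A task counts as effective if at least `required` participants completed it."""
    return sum(outcome == COMPLETED for outcome in outcomes) >= required
```

With eight participants and the default threshold, a task with six completions and two time-outs would count as effective, while five completions would not.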

Discovering Potential Usability Problems

Tasks with low effectiveness and/or low productivity were identified as tasks with potential usability problems. Productivity was measured in terms of the productive time spent to reach the goal of a task. Time spent was divided into different phases, e.g. when the user starts to be productive (follows the pre-defined correct itinerary), or unproductive (the user leaves the pre-defined itinerary). The decoding consisted of setting productivity time stamps when the user entered and ended each phase, or during “computer processing time” (when the PDA processes a database task making the user wait). Productivity was only analyzed within an effective task, i.e. a task that was completed.
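As a rough illustration of how such time stamps translate into time per phase, the following Python sketch sums the duration of each state for one completed task. The data layout (a list of `(second, state)` stamps), the state labels, and the example values are assumptions made for this sketch; they do not reproduce the Morae coding scheme used in the study.

```python
from collections import defaultdict

# The three states described in the text; the labels are illustrative.
STATES = ("productive", "unproductive", "processing")


def time_per_state(stamps, task_end):
    """Sum the seconds spent in each state for one completed task.

    `stamps` is a time-ordered list of (second, state) pairs; each state
    lasts until the next stamp, and the last one lasts until `task_end`.
    """
    totals = defaultdict(float)
    boundaries = stamps[1:] + [(task_end, None)]
    for (start, state), (next_start, _) in zip(stamps, boundaries):
        if state not in STATES:
            raise ValueError(f"unknown state: {state}")
        totals[state] += next_start - start
    return dict(totals)


# Made-up example: the user leaves the correct itinerary once (12 s to 20 s)
# and waits for the PDA to save at the end (31 s to 36 s).
example = [(0, "productive"), (12, "unproductive"),
           (20, "productive"), (31, "processing")]
summary = time_per_state(example, task_end=36)
```

A long unproductive share within a completed task would then flag the task for closer inspection.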

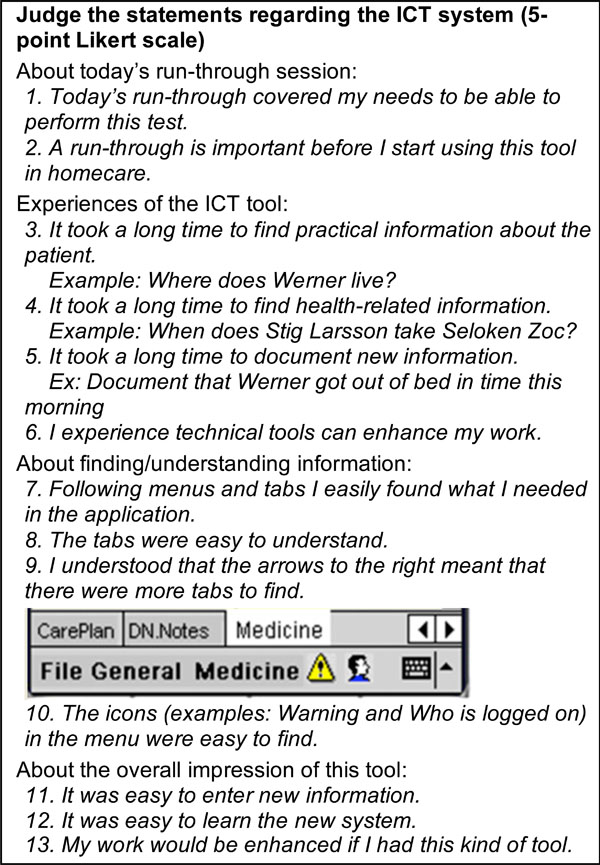

User Satisfaction

After spending time with the prototype, the participants communicated their opinions of the usage in a satisfaction questionnaire. They responded to 13 statements and rated their satisfaction using a 5-point Likert scale [16Trochim WMK. Research Methods Knowledge Base 2e. Atomic Dog Publishing 2006.]. This scale ranged from strongly agree to strongly disagree and was associated with numerical values (higher scores correspond to a more desirable state) to summarize the subjective opinions by their medians. Due to the high number of temporary staff, the system must not only be easy to use, but also easy to learn, enabling new users to commence working with the system quickly. Therefore “learnability” was a major requirement, as was the perceived time to fulfill a task, which must be “short”; both were measured by the questionnaire (Fig. 7).

Fig. (7) User satisfaction questionnaire: Statements regarding the ICT tool and the application.
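The median-based summary of the Likert ratings can be illustrated with a short Python sketch. The label for the middle category, the reverse scoring of negatively worded statements, and the example answers are assumptions for illustration only; the questionnaire's actual wording and data are not reproduced here.

```python
from statistics import median

# 5-point scale; "neutral" as the middle label is an assumption.
SCALE = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def score(answer: str, positive_statement: bool = True) -> int:
    """Map an answer to a number so that higher always means more desirable.

    For a negatively worded statement the scale is reversed (assumption).
    """
    value = SCALE[answer]
    return value if positive_statement else 6 - value


def statement_median(answers, positive_statement: bool = True):
    """Summarize all participants' answers to one statement by the median."""
    return median(score(a, positive_statement) for a in answers)


# Made-up answers from eight participants to one positively worded statement.
example_answers = ["agree"] * 5 + ["strongly agree"] * 3
example_median = statement_median(example_answers)
```

The median is a natural choice here because Likert categories are ordinal, so distances between neighboring answers need not be equal.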

RESULTS AND ANALYSIS

The study purpose guides the presentation of results and analyses made. A participant analysis of the pre-test questionnaire is followed by sections where system effectiveness, potential usability problems and user satisfaction results are presented and analyzed.

Pre-Test Results: Participant Analysis

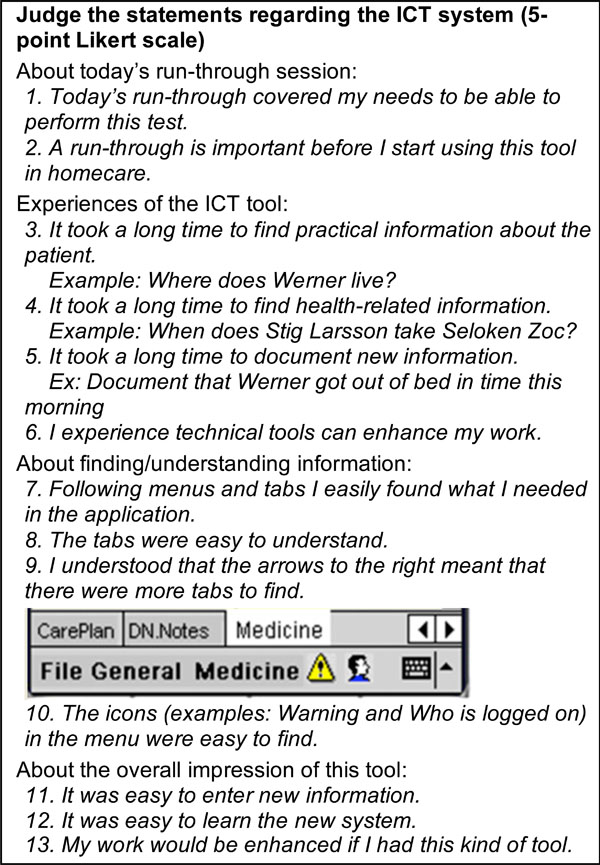

Eight participants matching the participant profile were recruited. These participants were from a Swedish HHS district other than the one where the VHR was originally developed. They had no knowledge of the VHR system or of the patients they would be visiting during the test. Prior to the test, a conventional pre-test questionnaire gathered further information about each participant: age, years in the profession and domain experience, as well as use of computers and handheld electronic devices.

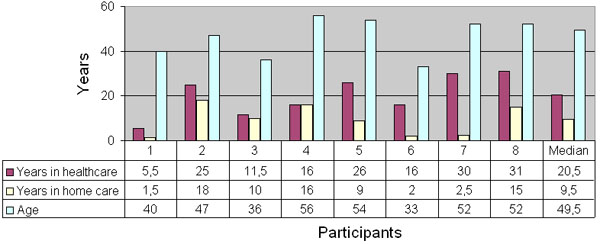

All participants were assistant nurses; four of eight had 20 years or more of healthcare work experience; four of eight had 10 years' experience of work in homecare, with no correlation between the two (Fig. 8). Four participants were 50 years old or more; no participants were younger than 30 years old.

Fig. (8) Participants’ age and number of years in healthcare and in homecare.

Results from the pre-test questionnaire revealed that all participants frequently used computers at work; 5 used one 3-4 days a week, and 3 used one every day. All but one also frequently used a computer at home; 5 used one 3-4 days a week and 2 used one every day at home. One used a computer at home once a week. All participants used the computer for searching/surfing the Internet, e-mail, chat, games, etc. None of the participants had prior experience of handheld computers.

Effectiveness Results

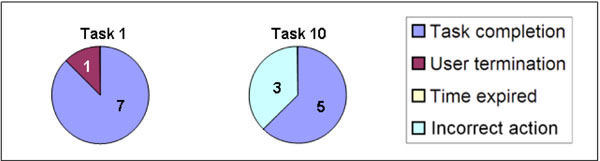

When the user succeeds in reaching the goal, the task is completed and considered effective. On the other hand, a failure could be due to multiple reasons. For example, an incorrect action could have been caused by distraction and not specifically lack of knowledge or skill. It was therefore important to separately analyze each failure divided into sub-definitions (user termination, time expired and incorrect action) and to group all participant responses according to the goals (I) Finding practical information, (II) Finding health-related information, and (III) Documenting new information (Figs. 9-11). Two tasks were navigation tasks where the participants were instructed to switch from one patient to another. These tasks were completed by all eight (Fig. 12). Where several participants made the same error, this indicated there was a usability problem, as in task 6.

Fig. (12) Effectiveness in user navigation: Switch from one patient to another. Both task 9 and task 12 were completed by all 8 participants.

Results for tasks 7, 8, and 11 are based on 7 participants; two participants received feedback from the test manager after the time had expired, one on task 6 and one on task 10. Consequently, these participants learnt how to fulfill task 7 and task 11, respectively. This affected the data and we thus chose not to consider their responses. Tasks 7 and 8 were connected, and task 8 could be completed only after successful completion of task 7; therefore task 8 was also omitted for this participant. In total, 10 of 14 tasks were considered effective, that is, the participants succeeded in using the prototype to accomplish the assigned task and consequently they reached the HHS main goals.

Seven of 14 tasks were completed without failure by all participants. Of the remaining seven tasks, a total of 20 failures in 109 trials (18.35%) were noted. The main problems arose when documenting new information (10 failures in 22 trials, the majority occurring in task 6). Finding health-related information was performed in seven tasks and six failures in 55 trials were recorded. Four failures in 16 trials of two tasks relating to finding practical information were also noted.
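The reported counts can be cross-checked with a small Python calculation. The failure and trial counts per goal are taken from the text; the 16 failure-free navigation trials follow from tasks 9 and 12 being completed by all eight participants. The dictionary layout and function name are illustrative only.

```python
# (failures, trials) per goal, as reported in the text.
counts = {
    "documenting new information": (10, 22),
    "finding health-related information": (6, 55),
    "finding practical information": (4, 16),
    "navigation": (0, 16),  # tasks 9 and 12, completed by all 8 participants
}


def failure_rate(failures: int, trials: int) -> float:
    """Failure rate in percent, rounded to two decimals."""
    return round(100 * failures / trials, 2)


rates = {goal: failure_rate(f, t) for goal, (f, t) in counts.items()}

total_failures = sum(f for f, _ in counts.values())  # 20
total_trials = sum(t for _, t in counts.values())    # 109
overall_rate = failure_rate(total_failures, total_trials)  # 18.35
```

Note that the 109 trials only add up when the navigation trials are included, so the overall rate of 18.35% appears to refer to all 14 tasks; per goal, documenting new information stands out with roughly 45% failures.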

Effectiveness Analysis

The analysis is performed with regard to the three goals in homecare, where “finding information” contains both health-related information and practical information.

Finding Information

A detailed analysis of the data revealed that one participant failed early in the test, on task 1, Find Werner’s address, and task 3, find out if Werner is allergic to something, probably caused by distraction (reason for the failure was user termination). The other participants completed all initial tasks, apart from number 4, Look up the care contract, where two participants chose not to search for the information.

Task 8, Find your last note in the list of daily notes, caused problems for three of seven participants; two gave incorrect answers and the time expired for one participant. Here the participants got lost in the system hierarchy. Consequently, the three levels presented in the system may have to be redesigned.

Regarding task 10, finding the district nurse’s name and address, consistency between the content in tabs and menu has to be improved. Here the participants seem to become confused as to whether to use the tabs or the menu. This confusion resulted in three participants exceeding the time limit. Of the tasks regarding how to find information, task 10 (practical information) and task 8 (health-related information) were considered not effective and need further exploration.

Documenting New Information

Task 6, Use the fastest way to document..., was problematic. Only one participant completed the task, three participants acted incorrectly according to the pre-defined itinerary and four participants failed due to the time constraint. This was probably due to the task formulation in combination with a system design flaw. Indeed, in the current VHR system there are two ways of documenting a “performed intervention” of the care plan. The system supports both a writing mode for daily notes and ticking off a performed intervention on the care plan tab. The latter is faster and we expected the participants to choose this way. However, it appears that participants got confused by the expression “the fastest way” in the task instructions and three of them chose to document a daily note. Four participants had trouble finding the “correct itinerary” and spent time merely searching the application for this view. In these cases the maximum time was reached (180 seconds).

The design flaw was obvious; if two ways of documenting an intervention (normal vs accelerated) are available, the interaction has to start on the same tab. This tab should offer either the quick tick-box documentation or to write a complete daily note. Moreover, poor task formulation also caused problems in the analysis - the “correct” itinerary was omitted, but the participants managed to document the intervention regardless. We chose to consider the task as not effective, and a new design for the documenting mode is being prepared.

As explained earlier, tasks 7, 8 and 11 include only seven participants. Task 7 caused problems for three of the seven remaining participants. As in task 6, two of these failures were a result of incorrect actions, that is, the participants documented a daily note instead of ticking off the care plan’s “performed intervention”. Tasks 6 and 7 were not effective, and thus the writing mode has to be improved to avoid user failures.

Results: Potential Usability Problems

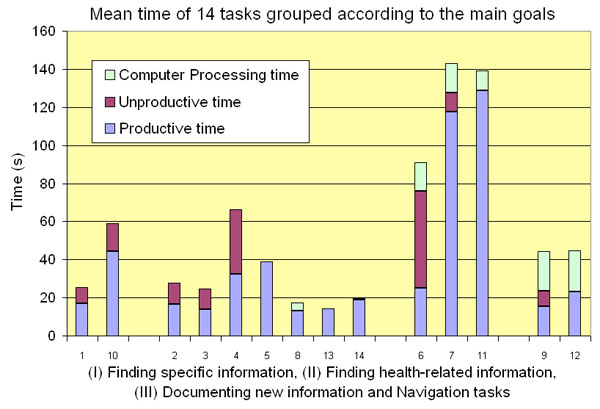

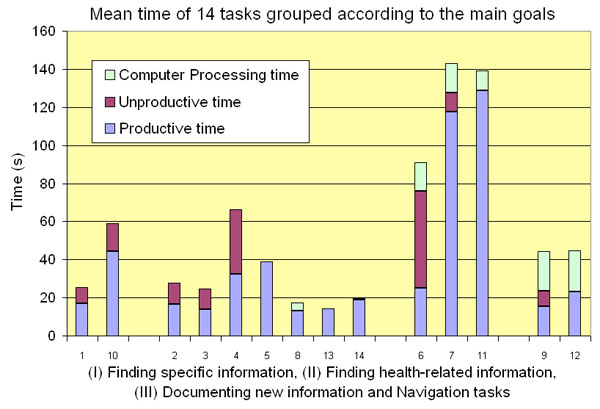

To identify potential usability problems the efficiency of each task was measured. Total time spent in a completed task was divided into three states: productive time; unproductive time; and computer processing time, as defined in the section Materials and Methodology. Where the participants deviated from the pre-defined and most direct route in achieving the task goal, we noted a potential usability problem and analyzed why the participant was unproductive.

In Fig. (13), mean time is divided into the three states and grouped according to the homecare goals. Each task needed to be accomplished within a pre-defined time in order to be efficient. For each task, mean and median time was calculated (Table 2).

Fig. (13). Mean time for each task, based on the participants that completed the task.
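As a minimal sketch of the per-task summary, the mean and median completion times can be computed alongside the number of participants exceeding the pre-defined time. The function name, the sample times and the 40-second limit are assumptions made for illustration; they are not values from Table 2.

```python
from statistics import mean, median


def summarize_task(times_s, limit_s):
    """Return mean, median and the number of participants over the limit."""
    return {
        "mean": mean(times_s),
        "median": median(times_s),
        "over_limit": sum(1 for t in times_s if t > limit_s),
    }


# Illustrative completion times in seconds; not data from the study.
times = [25, 30, 35, 38, 45, 55, 70, 22]
summary = summarize_task(times, limit_s=40)
```

Reporting the median next to the mean is useful with only eight participants, since a single slow participant can shift the mean noticeably.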

Analysis of Potential Usability Problems

Task completion time differed between the tasks. Mean times of tasks which related to writing new information (tasks 7, 11) were approximately 2 minutes, while mean times of tasks relating to health-related information searching were between 20 and 40 seconds (tasks 2, 3, 4, 5, 8, 13, 14). Tasks where the participants switched from one patient to another resulted in a 20-second computer processing time (tasks 9, 12). Saving new information (tasks 6, 7, 11) also resulted in a period of processing time.

Finding Information

In six tasks whose objective was to find information, the majority of the participants completed the tasks in less than 30 seconds (t1: 6/7; t2: 5/8; t3: 6/7; t8: 4/4; t13: 8/8; t14: 7/8). This was as fast as the experienced users.

Task 5, finding the goals in Werner’s care plan, took slightly longer. Five of eight participants found the correct information in less than 40 seconds. Two of eight participants found it in 40-60 s, and one participant in 60-80 s (mean time = 39 s). Task 10, finding the name and phone number of the responsible district nurse, also took slightly longer. The median for this task was 50 s and the mean time was 36.75 s. It is also important to note that three users exceeded the time limit, probably due to difficulties in finding the information in the menu. Participants may have been confused, as previous tasks involved navigation in the tabs, not the menus. Moreover, the label of the menu containing the information violated the heuristic rule of “match between system and real world” [17]. Post-test results indicate that this menu was too generic; “General info” did not assist users in identifying the actual content of this menu. In fact, “General info” could be understood as containing information about the system and not about the patient. Renaming the menu is advisable. The design idea of storing generic information in the menu and health-related information on the tabs was not obvious to the participants. The system should support a patient-centered view and clearly indicate that patient-specific information is central, and to be found on the tabs. In contrast, the framework for treating the patient, such as contact information for other care professionals, is more peripheral and could therefore be kept in the menus.

Task 4, looking up the care contract, caused more unproductive time than productive time for three participants while they tried to find the correct information. Two participants did not even search for the care contract. This could be due to the fact that these participants did not use the same terminology for “care contracts” as the district where the system was developed. It was observed that participants with a lot of unproductive time had trouble understanding the tab navigation, especially how many tabs there were and where the user could find them. The tabs followed the conventional PocketPC environment and were placed on a scroll bar, and there was no marker, other than the navigation arrows, to indicate that the tabs could continue to the left (Fig. 14). It is also interesting to note that 3/8 participants caused all unproductive time in task 2.


Fig. (14). Users need to conceptualize the navigation arrows on the right hand side of the tab bar.

Documenting New Information

A stated requirement in the context-of-use analysis was that “tasks needed to be accomplished in a short time”. To document a note, two minutes were considered feasible. Almost exclusively productive and computer processing time was recorded for the four participants completing task 7 and the seven participants (of seven) completing task 11. Although all seven participants apparently knew how to write a note, five participants still needed more than two minutes to fully document one daily note. When planning the task by measuring the experienced users’ time spent, we included time to think and formulate a new note within the two minutes. When analyzing time spent, the differences were largely due to the time required for participants to put their thoughts into words. The experienced users subsequently confirmed that some notes do take a considerable time to draft and that documenting daily notes varies in time even between experienced users. If thinking creates the difference between participants, it probably makes little difference whether a PDA or a pen is used; consequently, two minutes should be a feasible mean time to accomplish the task.

When observing the participants and decoding the recordings we noticed that although the participants were novices on the PDA, they were fast in actual input of text. Surprisingly, there were only small differences in input efficiency for novices compared to experienced users. For example, two participants used the stylus very quickly and finished task 11 in less than 90 seconds. This was as fast as the experienced users.

Navigation Tasks

All participants completed the navigation tasks, numbers 9 and 12. The first time they switched to another patient, there was some unproductive time noted. The next time the participants switched between patients, they had learnt the function and no unproductive time was noted in task 12; all eight completed the task successfully.

Due to heavy patient data transfer on a slow mobile platform, a computer processing time of 20 seconds appeared in these tasks. The participants felt frustrated with this processing time, particularly as the time required to complete the tasks was less than the computer processing time. However, in real work, most of the time users can switch patients while doing other non-ICT related tasks. New and more powerful devices will also reduce the processing time.

User Satisfaction Results

All 8 participants completed a user satisfaction questionnaire with focus on their individual interaction with the VHR. The statements concerned the demo session, learnability and ease of use in relation to interaction with the tool, and finding and understanding information. The subjective opinion about technical tools in general and the overall impression of this particular tool were also examined (Table 3). The participant group was too small to estimate population characteristics. Thus, regarding e.g. perceived time (long or short) for the system to respond to user interactions, the quantified subjective answers should only be considered as indicators of possible problems with the efficiency of the system.

User Satisfaction Analysis

The post-test questionnaire captured the participants’ opinions regarding the homecare goals and their fulfillment. Quantitative measures such as effectiveness and potential usability problems were compared to the participants’ qualitative answers. For example, the homecare requirement “each task needs to be accomplished within a limited timeframe” was analyzed using actual time spent, a component for identifying potential usability problems, together with the participants’ opinions about the perceived time to accomplish a task. Another requirement, “the system must be easy to learn”, was measured both in the user satisfaction questionnaire and as an effect of performance time; if you are effective and efficient in your work after a limited education and with little experience of the application, then the system is easy to learn. This analysis also included how easily users found practical and health-related information in the system, and how easily they documented new notes.

Finding Information

It was not perceived to take a long time to find information in the system. The participants rated the perceived time to find health-related information (median=4) as shorter than to find practical information (median=3). These opinions were in line with the usability measurements.

The statement “Following menus and tabs, I easily found what I needed in the application” received a median of 3. This was contrasted by the responses to the statement “The tabs were easy to understand”, which resulted in a median of 4. This suggests that the participants preferred working with the tabs. On the other hand, icons on the menu seemed to be easy to understand, with a median of 4. In short, visualization of how to navigate and find practical information has to improve, possibly by a redesign using more icons on the menu bar and tabs containing practical information.
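The comparison of statement medians above can be sketched as follows. The shortened statement keys and the individual ratings are hypothetical, chosen only so that the example runs; they are not the participants' answers.

```python
from statistics import median

# Hypothetical ratings from eight participants per statement
# (illustrative values, not the study's questionnaire data).
ratings = {
    "found_what_i_needed": [3, 3, 2, 4, 3, 4, 3, 2],
    "tabs_easy_to_understand": [4, 4, 5, 3, 4, 4, 5, 3],
}


def statement_medians(ratings_by_statement):
    """Median rating per statement across participants."""
    return {name: median(values) for name, values in ratings_by_statement.items()}


medians = statement_medians(ratings)
# A higher median for the tab statement would point to a preference for tabs.
prefers_tabs = medians["tabs_easy_to_understand"] > medians["found_what_i_needed"]
```

The median is used rather than the mean because ratings on such a scale are ordinal and the group is small.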

Documenting New Information

Regarding perceived time to write new information, four participants replied that it took neither a long nor a short time to document new information. Consequently there is also scope for improvement in this area.

The Overall Impression

Regarding learnability and the overall impression of the tool, the participants were positive. Three strongly agreed that the system was easy to learn and two partly agreed. The statement on entering new information assessed whether the tool (the PDA application and the stylus) was adequate for novice users. The majority agreed or strongly agreed, resulting in a median of 4, which coincides with the observations made regarding the participants’ input speed. As the participants were all novice users, statements regarding finding and understanding information and system navigation components (menus, tabs, icons and arrows) also reflect learnability. Responses to these statements varied from a median of 3 to 5, resulting in an overall positive rating of how the user interface displays information and visualizes navigation. Graphical components (icons, arrows and the tab menu) seem to be easy for novices to understand and use. In contrast, the textual menu is more difficult to use; this appears to be due to the awkward labeling of the menu items. This has to be improved.

Finally, two identical statements regarding use of ICT tools to enhance homecare work, included in both the pre- and post-test questionnaires, revealed changed opinions. After the education but before the test, six participants partly agreed with the statement “I experience that technical tools can enhance my work”. After testing the VHR, the participants were more confident in using the PDA and felt that such a tool could be successfully used in their homecare district. The median for the same statement increased by one point, to 5. The statement “My work would be enhanced if I had this kind of tool” received an equally high median.

To summarize, the subjective opinions are in line with the objective measures about the learnability of the application; the novices rapidly learnt how to use both the device and the application. Effectiveness and efficiency increased during the test and in the last four tasks no unproductive time was recorded. Education and usage can always increase performance; however, the participants were overall satisfied with the application.

DISCUSSION AND FUTURE WORK

This study explored how effective a new mobile healthcare application was for experienced homecare staff based on their performance of daily activities (reaching the homecare goals I-III). Different factors affected the test performance; here we discuss implications of the method, as well as social factors influencing the effectiveness of the application, and potential usability problems.

Methodological Considerations

Although practical use of usability evaluations is still often lacking in health informatics in general [18, 19] and homecare in particular [11], we here reflect on this particular set-up. A drawback of this usability lab test approach was that even though a mobile system was tested, the study did not consider interaction with the device while participants were in motion. However, our previous research shows that when homecare staff interact with the electronic devices in the field, they normally either sit down in a patient’s home, or stand still just outside the patient’s door. In fact, interaction is seldom performed when the users are in motion.

When an application is tested in a usability lab, there is not always the opportunity to involve real user groups; often students or groups similar to the real user group participate. In this case, great care was taken to recruit participants who actually were experienced homecare staff and potential system users. According to Dumas and Redish [12], in a tentative study, the participant number should not be less than eight. Aware of the volunteer effect, where volunteers possibly perform better than others, we were nevertheless satisfied when eight real homecare professionals volunteered for the test. At this stage, randomized samples of users or larger numbers of test participants were considered unnecessary. Future evaluations will however involve a larger number of participants and/or possibly be carried out in a real homecare environment.

The test session was planned together with experienced users from the district where the system was originally developed. Although they performed pilot tests and validated the tasks, some terminology problems occurred during the test, e.g. in task 6. These could possibly have been revealed if a pre-test had been performed by a novice user. A similar reflection applies to the post-test questionnaire, where statements 3-5 had reversed values that were difficult for the participants to understand; we therefore recommend not using reversed statements in questionnaires.
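Where reversed statements are nevertheless used, their values have to be mirrored before medians are compared across statements. A minimal sketch of this recoding follows; the five-point scale and the sample answers are assumptions, and only the set of reversed statements (3-5) is taken from the text.

```python
SCALE_MIN, SCALE_MAX = 1, 5   # assumed five-point scale
REVERSED_STATEMENTS = {3, 4, 5}  # statements 3-5 had reversed values


def recode(statement_no, response):
    """Mirror reversed statements so a high value always means a positive answer."""
    if not SCALE_MIN <= response <= SCALE_MAX:
        raise ValueError(f"response out of range: {response}")
    if statement_no in REVERSED_STATEMENTS:
        return SCALE_MIN + SCALE_MAX - response
    return response


# Hypothetical answers from one participant: statement number -> rating.
answers = {1: 4, 2: 5, 3: 2, 4: 1, 5: 3}
recoded = {no: recode(no, value) for no, value in answers.items()}
```

Recoding fixes the analysis but not the participants' difficulty in reading reversed statements, which is why avoiding them remains the simpler option.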

The education of the participants was planned with managers in homecare and performed on one occasion. The time between education and test varied from two to four weeks for the participants, and they had no access to the handheld electronic devices in between. Evidently, some participants complained they did not remember the education. This study did not measure how easily the system can be used after a period of absence; however, we suggest there is a strong relationship to the time of education and the user’s everyday familiarity with the system. If two hours is the maximum time to introduce novice users to an ICT tool in homecare, then the education needs to be held in close proximity to the first day of work. A future study could investigate whether it is economically feasible to update the system or to amend the content and/or presentation of the system education.

Contextual Factors Affect Transferability

The VHR was originally developed for a specific homecare district and used by the HHS there. We were nevertheless interested in transferring the application to a different homecare district and a new user group. In theory, the same type of work is performed, that is, providing homecare for elderly patients. The two user groups did not differ drastically; assistant nurses with long experience of working in homecare were present in both groups. However, in reality, there were some differences, as explained below, and these affected the test results. If left without consideration, they could negatively affect the future implementation of the system.

In Sweden to date, there is no standardized terminology for documentation used by HHS. Terminologies differ between municipalities, or even between homecare teams in the same municipality. This occasionally made it difficult for the test participants to find the right information, as they were not familiar with some of the terms used. In task 4, look up the care contract, several users had difficulty finding the information and they stated this difficulty was due to differences in terminology.

Differences in work practices were also present. Care plans were used by “the original” homecare team to organize and plan the continuity of care required on a long-term basis for the patient. The care plan was also used as basis for documenting when a planned intervention had been performed, in order to provide data for follow-up. The participants in the test were unaccustomed to using a care plan at all and therefore had trouble grasping the full meaning of this concept.

Some of these differences may also relate to the two homecare teams’ different experience of working with documentation. In the original care team, the OLD@HOME project had triggered an interest in documentation issues, and during project time the HHS team was given room for reflection on documentation practices. Additional education with respect to care documentation had also been provided for that HHS team. The second team, that is the test participants, consisted of highly experienced healthcare professionals who were however less experienced in documenting the care provided. This may have affected the test results regarding system efficiency. Possibly we should have considered the differences in documentation experiences when limiting the task time for writing notes. However, the requirement from the HHS was to be able to document a note in a couple of minutes, thus the maximum time was accordingly set to 2 minutes.

These participants were more experienced in using computers than expected. Though they lacked experience of handheld ICT tools like the PDA, the novices quickly gained input skills. Surprisingly, there were only minor differences in input efficiency when compared to the experienced users. The tool itself was not an impediment to these participants and some of them also stated that the application could be directly inserted into their work practices.

Despite this acknowledgement, it is evident that specific contextual considerations are required before the VHR will be ready for implementation in a new homecare area. Further research areas could be to develop an evaluation method that joins lab results with subsequent field observations focusing on differences in the respective work practices. Joint results would improve the developers’ ability to adapt the system to the new context.

Usability Problems Affect Performance

During the test, previously unknown problems were identified. The design of the VHR relied on both tabs and menus; general information was only to be found in a menu, whereas all individual health information was found in tabs. Despite the developers’ rationale for placing different information in tabs and menus, the participants had trouble conceptualizing the difference. The test demonstrated that the mix between menus and tabs made it difficult for novice users to find information. In the user satisfaction questionnaire the users preferred tabs to menus, consequently tabs should be the primary interaction component and, if needed, menus should only hold application information, such as File, Quit and User Status.

Another design flaw was the display of the three levels of interaction. The system contains a reading mode providing overview, where items are shown in lists, a detailed reading mode, where one item from a list is presented in detail, and a writing mode, which is accessible from the detailed reading mode. The novice users had difficulties identifying their current level, and how to navigate onwards from this point. According to Nielsen’s guidelines [17], you should always inform the user of current position and possible ways to continue. A potential solution could be to provide linked and clickable navigation icons (arrows) in the title bar.

In several of the tasks, extensive computer processing times were measured. In real work situations, the HHS staff make use of this waiting time by, for instance, opening the patient’s file before reaching his/her home. This enables the application database to process while the HHS is still approaching the patient’s home. In the lab, focus was on the PDA only, making the sometimes slower computer processing time quite frustrating. Therefore, long processing times were identified as a usability problem which future versions will need to address.

Despite the uncovered potential usability problems and the differences in context of use, the participants were overall positive after testing the system. As the participants were real homecare staff, there are some grounds to claim that the results generated can be regarded as important indicators of possible problems with the effectiveness of the system. On the other hand, they acknowledged the user centred system development method used when developing the VHR. They expressed that the VHR seemed useful, that it corresponded to their daily working routines and was developed to meet their needs in a mobile environment. Additionally, they claimed it would indeed increase their sense of safety to have access to relevant information in their daily work.

Even though theory suggests that systems cannot be adequately evaluated by their developers [20], we still found it valuable to observe the participants using the VHR. Our observations led to additional ideas for system improvements. These ideas are now being fed into the iterative development process and will appear in the next VHR version.

CONCLUSION

In a usability lab we explored the usability, in terms of effectiveness, user satisfaction and potential usability problems, of a new mobile homecare application when transferred to a new user group. Eight experienced home help service (HHS) staff without prior knowledge of the system, except for a limited period of instruction, tested the VHR. The participant analysis revealed that the HHS personnel frequently used computers and were more computer experienced than expected.

The subjective analysis indicated that the participants were overall satisfied with the application; they felt they only encountered some minor problems with the application. The analysis of quantitative data showed that a majority of the tasks (10/14) were considered effective; all 8 participants carried out 7 of 14 tasks without failure. Moreover, the novice users handled the input facility as quickly as the experienced users. The results of this usability evaluation can be used as input for further system development and enhancement.

Although work situations and user groups are similar for HHS districts responsible for care of elderly citizens in Sweden, contextual factors were the main impediment for transferring the system to a new district. If left without consideration, these issues could hamper the work of a different homecare district, should the VHR be implemented in that region. Consequently, in its current state, the VHR should not be transferred to another district, unless by means of a process of careful interpretation and translation. Field observations and similar studies have to be performed to capture contextual differences in the homecare district where the system is to be implemented.

In conclusion, usability evaluations should be used more frequently in health informatics research and system development as they convey necessary knowledge about issues that need to be corrected before systems are used routinely. However, this study shows that contextual differences such as terminology use and documentation practices affected the test results and hindered the performance more than the uncovered usability problems. Therefore, further research should also focus on system transferability, especially methods for system deployment considering contextual differences.

ACKNOWLEDGEMENTS

This study was financed by Centre for eHealth, Trygghetsfonden and the clinical and industrial partners of OLD@HOME. The authors wish to thank the experienced users Ingela Möller and Johanna Böhlin from the HHS in Hudiksvall for conducting the education and the HHS group of the Municipality of Tierp participating in the usability lab study, as well as the M.Sc. students Kristian Kurki and Christer Hellström for valuable aid in data acquisition and analysis made in the lab.

REFERENCES

| [1] | Koch S, Hägglund M, Scandurra I, Moström D. Old@Home - Technical support for mobile closecare, Final report 2005 Report No.VR 2005: 14 http://www.medsci.uu.se/mie/project/closecare/vr-05-14.pdf 2005. last visited June 19 |

| [2] | Schuler D, Namioka A, Eds. Participatory Design - principles and practices. New Jersey: Lawrence Erlbaum Associates 1993. |

| [3] | Greenbaum J, Kyng M. Introduction: Situated Design In: Greenbaum JKM, Ed. Design at work: Cooperative design of computer systems. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc 1992; pp. 3-24. |

| [4] | Luff P, Heath C. Mobility in collaboration In: Poltrock S, Grudin J, Eds. In: Proceedings of the 1998 ACM Conference on Computer Supported Cooperative Work; 1998; November 14 - 18, 1998; Seattle Washington, United States. New York: ACM Press 1998; pp. 305-14. |

| [5] | Heath C, Luff P. Documents and professional practice: “bad” organisational reasons for “good” clinical records In: Ackerman MS, Ed. In: Proceedings of the 1996 ACM Conference on Computer Supported Cooperative Work 1996; November 16 - 20, 1996; Boston Massachusetts, United States. ACM Press 1996; pp. 354-63. |

| [6] | Hardstone G, Hartswood M, Procter R, Slack R, Voss A, Rees G. Supporting informality: team working and integrated care records. Proceedings of the 2004 ACM Conference on Computer Supported Cooperative Work 2004; November 06 - 10, 2004; Chicago Illinois, USA. ACM Press 2004; pp. 142-51. |

| [7] | Scandurra I, Hägglund M, Koch S. Visualisation and interaction design solutions to address specific demands in Shared Home Care Stud Health Technol Inform 2006; 124: 71-6. |

| [8] | Kjeldskov J, Graham C. A review of mobile HCI research methods In: Chittaro L, Ed. Mobile HCI. Udine, Italy: Springer-Verlag GmbH 2003; pp. 317-5. |

| [9] | Boivie I. A Fine Balance - addressing usability and users' needs in the development of IT systems for the workplace http://publications.uu.se/abstract.xsql?.dbid=5947: Uppsala University 2008. last visited June 19 |

| [10] | ISO 9241-11, Ergonomic requirements for office work with visual display terminals, Part 11: Guidance on usability Geneva: International Organisation for Standardization 1998. |

| [11] | Koch S. Home telehealth - Current state and future trends Int J Med Inform 2006; 75(8): 565-76. |

| [12] | Dumas J, Redish J. A practical guide to usability testing In: Exeter, UK: Intellect Books. 1999; p. 22 et sqq. |

| [13] | Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems J Biomed Inform 2004; 37(1): 56-76. |

| [14] | Beuscart-Zephir MC, Brender J, Beuscart R, Menager-Depriester I. Cognitive evaluation: How to assess the usability of information technology in healthcare Comput Methods Programs Biomed 1997; 54(1-2): 19-28. |

| [15] | Maguire M. Context of use within usability activities Int J Hum Comput Stud 2001; 55(4): 453-83. |

| [16] | Trochim WMK. Research Methods Knowledge Base 2e. Atomic Dog Publishing 2006. |

| [17] | Nielsen J. Heuristic evaluation In: Nielsen J, Mack RL, Eds. Usability Inspection Methods. NY: John Wiley & Sons 1994. |

| [18] | Kushniruk A. Evaluation in the design of health information systems: application of approaches emerging from usability engineering Comput Biol Med 2002; 32(3): 141-9. |

| [19] | Ammenwerth E, Brender J, Nykanen P, Prokosch H-U, Rigby M, Talmon J. Visions and strategies to improve evaluation of health information systems: Reflections and lessons based on the HIS-EVAL workshop in Innsbruck Int J Med Inform 2004; 73(6): 479-91. |

| [20] | Sommerville I, Sawyer P. Viewpoints: principles, problems and a practical approach to requirements engineering Ann Software Eng 1997; 3: 101-30. |